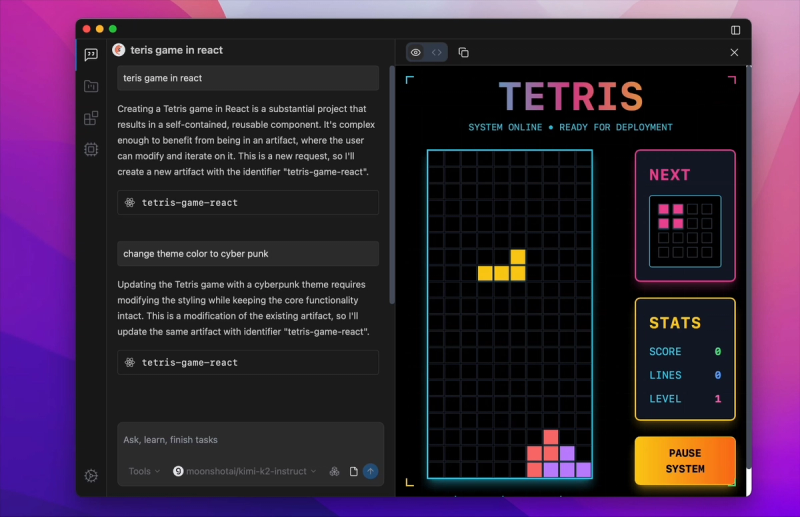

ChatFrame is a cross-platform desktop chatbot application designed to unify access to multiple Large Language Model (LLM) providers. It supports the Model Context Protocol (MCP) and offers built-in retrieval-augmented generation (RAG) capabilities for local files, ensuring users maintain full control over their data without relying on third-party services.

Key Features:

- Unified Interface: Interact with various LLM providers such as OpenAI, Anthropic, Google AI Studio, Qwen, and more, all within a single polished app.

- MCP Support: Compatible with SSE, Streamable HTTP, and Stdio MCP servers, allowing integration of custom tools at runtime. For example, users can configure a Postgres MCP server with a local runtime environment.

- Local RAG Implementation: Easily turn PDFs, text files, markdown documents, or code files into searchable context by uploading them into workspaces and building vector indexes locally.

- Multimodal Chat: Supports text and image inputs, with the ability to render interactive artifacts like React components, HTML, SVG, or code snippets in real time.

- Privacy-Focused: No data is uploaded to external servers, ensuring privacy and security of user information.

- Customizable Providers: Users can add API keys for supported providers or configure custom OpenAI-compatible endpoints.

- Cross-Platform: Available for macOS (both Apple Silicon and Intel) and Windows (x86_64).
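
To illustrate the idea behind the local RAG feature, here is a minimal sketch of chunking documents, building an in-memory vector index, and ranking chunks against a query. It is not ChatFrame's implementation: every name (`chunkText`, `embed`, `buildIndex`, `search`) is hypothetical, and the bag-of-words `embed` function is a stand-in for a real embedding model.

```typescript
// Toy local retrieval sketch. All names are hypothetical, not ChatFrame's API.

type Vector = Record<string, number>;
type Chunk = { source: string; text: string };
type IndexedChunk = Chunk & { vector: Vector };

// Split a document into chunks of at most `wordsPerChunk` words.
function chunkText(source: string, text: string, wordsPerChunk = 50): Chunk[] {
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: Chunk[] = [];
  for (let i = 0; i < words.length; i += wordsPerChunk) {
    chunks.push({ source, text: words.slice(i, i + wordsPerChunk).join(" ") });
  }
  return chunks;
}

// Stand-in for an embedding model: a sparse term-frequency vector.
function embed(text: string): Vector {
  const vector: Vector = Object.create(null);
  const tokens = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  for (const token of tokens) {
    vector[token] = (vector[token] || 0) + 1;
  }
  return vector;
}

// Cosine similarity between two sparse vectors; 0 if either is empty.
function cosineSimilarity(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (const token of Object.keys(a)) {
    dot += a[token] * (b[token] || 0);
    normA += a[token] * a[token];
  }
  for (const token of Object.keys(b)) {
    normB += b[token] * b[token];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// "Indexing" here just means storing each chunk alongside its vector.
function buildIndex(chunks: Chunk[]): IndexedChunk[] {
  return chunks.map((chunk) => ({ ...chunk, vector: embed(chunk.text) }));
}

// Return the topK chunks most similar to the query, best match first.
function search(index: IndexedChunk[], query: string, topK = 3): Chunk[] {
  const queryVector = embed(query);
  return index
    .map((entry) => ({ entry, score: cosineSimilarity(entry.vector, queryVector) }))
    .filter((result) => result.score > 0)
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map(({ entry }) => ({ source: entry.source, text: entry.text }));
}

const index = buildIndex([
  ...chunkText("notes.md", "Tauri apps bundle a Rust backend with a web frontend."),
  ...chunkText("db.md", "Postgres stores rows in tables and supports SQL queries."),
]);
const hits = search(index, "How do SQL queries work in Postgres?", 1);
console.log(hits[0].source); // prints "db.md"
```

In a real pipeline the retrieved chunks would be prepended to the prompt as context, and the term-frequency vectors would be replaced by dense embeddings stored in a persistent local index.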

Quick Start:

- Download the app from chatframe.co.

- Add your API keys via the Providers section and verify the configuration.

- Start chatting by clicking the Chat button.
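
For readers configuring a custom OpenAI-compatible endpoint, the sketch below shows the kind of request such an endpoint generally expects: a `POST` to `/chat/completions` under the base URL, with a bearer token and a JSON body containing `model` and `messages`. The `ProviderConfig` shape, the `buildChatRequest` helper, the localhost URL, and the model name are all assumptions made for illustration; they do not describe ChatFrame's internal configuration format.

```typescript
// Hypothetical provider entry; not ChatFrame's actual schema.
interface ProviderConfig {
  name: string;
  baseUrl: string; // assumed to include the version prefix, e.g. ".../v1"
  apiKey: string;
}

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface PreparedRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Build (but do not send) a chat completion request for an
// OpenAI-compatible endpoint.
function buildChatRequest(
  provider: ProviderConfig,
  model: string,
  messages: ChatMessage[],
): PreparedRequest {
  // Drop trailing slashes so the joined URL has exactly one separator.
  const base = provider.baseUrl.replace(/\/+$/, "");
  return {
    url: `${base}/chat/completions`,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${provider.apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  };
}

// Example values are placeholders, not real credentials or endpoints.
const request = buildChatRequest(
  { name: "local", baseUrl: "http://localhost:8000/v1/", apiKey: "sk-example" },
  "my-local-model",
  [{ role: "user", content: "Hello" }],
);
console.log(request.url);
```

To send it, split off the URL and pass the rest to `fetch`, for example `const { url, ...init } = request; await fetch(url, init);`. A successful response is one reasonable signal that the key and base URL are configured correctly.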

Technology Stack:

Built using Tauri for the desktop framework and the Vercel AI SDK for LLM interactions.

Advanced Features:

- Proxy support for routing all LLM API requests through a specified proxy.

- Keyboard shortcuts for creating new chats (⌘N) and toggling the sidebar (⌘B).

- Automatic background updates for a seamless experience.